Control System PoC

As stated in the previous post, I quickly put together a proof of concept for this to see how it worked. It needs a lot of refinement, but it could work!

With my Master’s thesis recently submitted, I’m taking the opportunity to develop a game concept I’ve been considering for some time. The goal is to create a small proof of concept to test the core mechanics and determine if the gameplay is as engaging as I envision it to be.

The game is a vertical-scrolling shooter that seamlessly blends fast-paced action with slower, more methodical sections, focusing on precise navigation. The success of these slower segments hinges on a unique, dual-touch control system, which is the primary focus of this initial prototype.

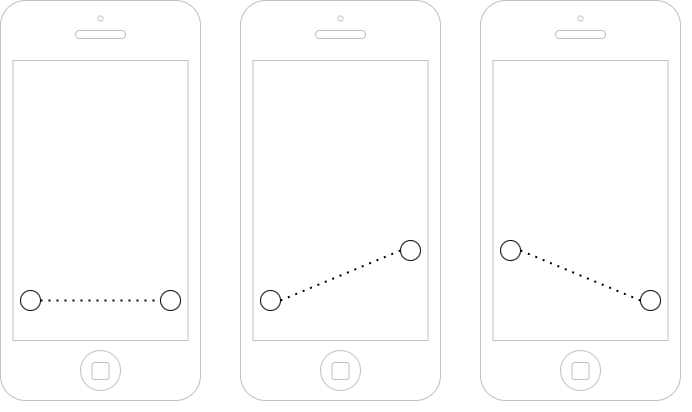

As the wireframe illustrates, the control scheme uses two touch points on the screen. The position, distance, and angle of the line connecting these points will directly manipulate the player’s ship. This could be used for navigating through complex environments, aiming a weapon, or controlling a defensive shield.
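To make the idea concrete, here is a minimal, engine-agnostic sketch of how the three values (position, distance, and angle) could be derived from two touch points. It is not the prototype's actual code: the names `TwoTouchState` and `two_touch_state` are hypothetical, the touch points are assumed to arrive as `(x, y)` screen coordinates with y increasing upward, and the "position" is assumed to be the midpoint of the line.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TwoTouchState:
    """Hypothetical container for the values derived from two touch points."""
    midpoint: Tuple[float, float]  # centre of the connecting line, in pixels
    distance: float                # length of the connecting line, in pixels
    angle_deg: float               # angle from first to second touch, in degrees


def two_touch_state(p1, p2):
    """Derive position, distance and angle from two (x, y) touch points.

    Assumption: p1 is the first touch and p2 the second, so swapping them
    rotates the reported angle by 180 degrees.
    """
    dx = p2[0] - p1[0]
    dy = p2[1] - p1[1]
    midpoint = ((p1[0] + p2[0]) / 2.0, (p1[1] + p2[1]) / 2.0)
    distance = math.hypot(dx, dy)
    # atan2 handles all four quadrants and a vertical line (dx == 0).
    angle_deg = math.degrees(math.atan2(dy, dx))
    return TwoTouchState(midpoint, distance, angle_deg)


# Two fingers held level, 200 pixels apart.
state = two_touch_state((100.0, 200.0), (300.0, 200.0))
print(state.midpoint, state.distance, state.angle_deg)
```

How these values map onto the ship is a design decision for the prototype. One possibility would be midpoint for steering, angle for aiming, and distance for something like shield size, but that mapping is only an illustration.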

My immediate objective is to build a functional prototype to test the feel and responsiveness of this control system on a mobile device. I have chosen Unity as the development engine due to its powerful tools and excellent cross-platform support, which will simplify the process of deploying and testing on both iOS and Android devices.

I’m going to develop it in Unity, simply because I have access to iOS and Android devices.

Phew! After months fuelled by code, curiosity, and far too much food, I’ve finally hit ‘submit’ on my Master of Science in Computer Science with Artificial Intelligence dissertation.

While the official results are still under wraps, I’m so excited to finally share the big question that has been at the heart of my work for the past year.

My research explored: “Exploring AI-Driven Personalised Learning Pathways to Support Student Transition from Secondary to Tertiary Education: A Synthetic Data Approach Grounded in Cognitivism and Constructivism”

In simple terms? I wanted to explore using AI to create personalised, supportive roadmaps for students, helping them bridge the often-daunting gap between finishing secondary school and starting university. This project sits right at the crossroads of educational theory and advanced AI, which is precisely why I decided to pause my Master of Education (MEd) to immerse myself in computer science. I chose the synthetic-data approach because it was the most practical option given the available timeframe and public datasets, and it also protects the privacy of real individuals.

Putting my MEd on hold wasn’t an easy choice, but I knew I needed to understand the “how” behind the technology I was so passionate about. Grappling with neural networks and the realities of building AI systems has given me a toolkit I couldn’t have imagined. It was less of a detour and more of an essential part of the adventure.

With my MSc dissertation submitted, my next step is to resume my MEd, which I put on hold. This is where the two threads of my academic journey can come together. Provided I receive the green light from my institution and lecturers, I plan to integrate my AI dissertation work with my remaining MEd modules. The goal is to translate what I’ve learned into real-world pedagogical frameworks. I’m especially excited to:

Looking even further ahead, a PhD has always been on the roadmap—if the stars align with the right timing, funding, and project! My dream would be to take this work to the next level by:

Completing this dissertation is a significant milestone, but it feels more like a waypoint than a final destination. If this MSc has taught me anything, it’s that the lines between different fields are invitations to collaborate, not walls to keep us apart. Bridging siloed knowledge within tertiary education is becoming critical for the industry, especially as AI systems spread into every field of knowledge.

By blending the worlds of AI and pedagogy, I aim to contribute to the creation of learning environments where technology truly supports people’s flourishing.

A huge thank you to all the colleagues, mentors, friends and family who have encouraged (and occasionally endured!) my cross-disciplinary journey. I’m eager to share my full results with you all—and to begin addressing the next set of questions.

Stay tuned for more updates once the final result is in the rear-view mirror!

Some planning and concepts on the newly restructured BSc Hons Applied Software Development, which I have the pleasure of being one of the programme leads on.
